Visualizing RDF Schema inferencing through Neo4J, Tinkerpop, Sail and Gephi

Last week, the Neo4J plugin for Gephi was released. Gephi is an open-source visualization and manipulation tool that allows users to interactively browse and explore graphs. The graphs themselves can be loaded through a variety of file formats. Thanks to Martin Škurla, it is now possible to load and lazily explore graphs that are stored in a Neo4J data store.

In one of my previous articles, I explained how Neo4J and the Tinkerpop framework can be used to load and query RDF triples. The newly released Neo4J plugin now lets you visually browse these RDF triples and perform fancier operations, such as finding patterns and running social network analysis algorithms, from within Gephi itself. Tinkerpop’s Sail implementation also supports RDF Schema inferencing. Inferencing is the process by which new (RDF) data is automatically deduced from existing (RDF) data through reasoning. Unfortunately, the Sail reasoner cannot easily be integrated within Gephi, as the Gephi plugin grabs a lock on the Neo4J store and no RDF data can be added, except through the plugin itself.

Being able to visualize the RDF Schema reasoning process, and to indicate graphically which RDF triples were added manually and which were automatically inferred, would be a nice feature to have. To implement it, we need to push graph changes from Tinkerpop and Neo4J to Gephi. Luckily, the Gephi graph streaming plugin allows us to do just that. In the rest of this article, I will detail how to set up the required Gephi environment and how we can stream (inferred) RDF data from Neo4J to Gephi.

1. Adding the (inferred) RDF data

Let’s start by setting up the required Neo4J/Tinkerpop/Sail environment that we will use to store and infer RDF triples. The setup is similar to the one explained in my previous Tinkerpop article. However, instead of wrapping our GraphSail as a SailRepository, we will wrap it as a ForwardChainingRDFSInferencer. This inferencer will listen for RDF triples that are added and/or removed and will automatically execute RDF Schema inferencing, applying the rules as defined by the RDF Semantics Recommendation.

We are now ready to add RDF triples. Let’s create a simple loop that reads in RDF triples and adds them to the Sail store.

The inference method itself is rather simple. We start by parsing the RDF subject, predicate and object. Next, we start a new transaction, add the statement and commit the transaction. This not only adds the RDF triple to our Neo4J store but also runs the RDF Schema inferencing process and automatically adds the inferred RDF triples. Pretty easy!

But how do we retrieve the inferred RDF triples that were added through the inference process? Although the ForwardChainingRDFSInferencer allows us to register a listener that is able to detect changes to the graph, it does not provide the API required to distinguish between manually added and inferred RDF triples. Luckily, we can still access the underlying Neo4J store and capture these graph changes by implementing the Neo4J TransactionEventHandler interface. After a transaction is committed, we can fetch the newly created relationships (i.e. RDF triples). For each of these relationships, the start node (i.e. RDF subject), end node (i.e. RDF object) and relationship type (i.e. RDF predicate) can be retrieved. If an RDF triple was added through inference, the value of the boolean property “inferred” is “true”. We restrict the relationships to the ones that are defined within our domain (otherwise the full RDFS meta model would be visualized as well). Finally, we push the relevant nodes and edges.
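The filtering step described above can be sketched in plain Java, without any Neo4J dependency. This is only an illustration: `TripleEdge` is a hypothetical stand-in for a Neo4J relationship, and both the `http://example.org/school#` namespace and the rule "subject and object must live in our namespace" are assumptions, not necessarily what the original handler does.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a Neo4J relationship: start node (subject),
// relationship type (predicate), end node (object) and the "inferred" flag.
final class TripleEdge {
    final String subject;
    final String predicate;
    final String object;
    final boolean inferred;

    TripleEdge(String subject, String predicate, String object, boolean inferred) {
        this.subject = subject;
        this.predicate = predicate;
        this.object = object;
        this.inferred = inferred;
    }
}

final class DomainFilter {
    // Assumed namespace of our own data; anything else is treated as meta model.
    static final String DOMAIN_NS = "http://example.org/school#";

    // Keep an edge only if both of its end points belong to our domain.
    static boolean inDomain(TripleEdge edge) {
        return edge.subject.startsWith(DOMAIN_NS) && edge.object.startsWith(DOMAIN_NS);
    }

    // Returns the edges that should be pushed to Gephi, in their original order.
    static List<TripleEdge> relevant(List<TripleEdge> created) {
        List<TripleEdge> result = new ArrayList<>();
        for (TripleEdge edge : created) {
            if (inDomain(edge)) {
                result.add(edge);
            }
        }
        return result;
    }
}
```

With this rule, an inferred triple such as "Davy is a teacher" is kept (both ends are in our namespace), while a triple whose object is a class from the RDFS vocabulary is dropped.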

2. Pushing the (inferred) RDF data

The streaming plugin for Gephi can read and visualize data that is sent to its master server. This master server exposes a REST interface that accepts graph data as JSON. The PushUtility used in the PushTransactionEventHandler is responsible for generating the required JSON node and edge data and pushing it to the Gephi master.
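As a rough sketch of what such a push utility could look like, the class below builds "add node" (`an`) and "add edge" (`ae`) events and posts them over HTTP. It is not the actual PushUtility: the class and method names are made up for illustration, and the endpoint `http://localhost:8080/workspace0?operation=updateGraph` is the streaming plugin's usual default as far as I know, so check it against your own Gephi setup. The `inferred` attribute on the edge is what we later use for coloring.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;

final class GephiPushSketch {
    // Assumed default endpoint of the Gephi streaming master; adjust as needed.
    static final String MASTER_URL =
            "http://localhost:8080/workspace0?operation=updateGraph";

    // Minimal JSON string quoting: escapes backslashes and double quotes only.
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // Builds an "add node" event: {"an":{"<id>":{"label":"<label>"}}}
    static String addNode(String id, String label) {
        return "{\"an\":{" + quote(id) + ":{\"label\":" + quote(label) + "}}}";
    }

    // Builds an "add edge" event carrying the "inferred" flag as an attribute.
    static String addEdge(String id, String source, String target,
                          String label, boolean inferred) {
        return "{\"ae\":{" + quote(id) + ":{\"source\":" + quote(source)
                + ",\"target\":" + quote(target)
                + ",\"directed\":true"
                + ",\"label\":" + quote(label)
                + ",\"inferred\":" + inferred + "}}}";
    }

    // Posts a single event to the master and returns the HTTP status code.
    static int push(String event) throws IOException {
        HttpURLConnection con =
                (HttpURLConnection) URI.create(MASTER_URL).toURL().openConnection();
        con.setRequestMethod("POST");
        con.setDoOutput(true);
        con.setRequestProperty("Content-Type", "application/json");
        try (OutputStream out = con.getOutputStream()) {
            out.write((event + "\r\n").getBytes(StandardCharsets.UTF_8));
        }
        int status = con.getResponseCode();
        con.disconnect();
        return status;
    }
}
```

Nodes should be pushed before the edges that reference them, for example `push(addNode("davy", "Davy"))` followed by `push(addNode("bob", "Bob"))` and then `push(addEdge("e1", "davy", "bob", "teaches", false))`.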

3. Visualizing the (inferred) RDF data

Start the Gephi Streaming Master server. This will allow Gephi to receive the (inferred) RDF triples that we send it through its REST interface. Let’s run our Java application and add the following RDF triples:

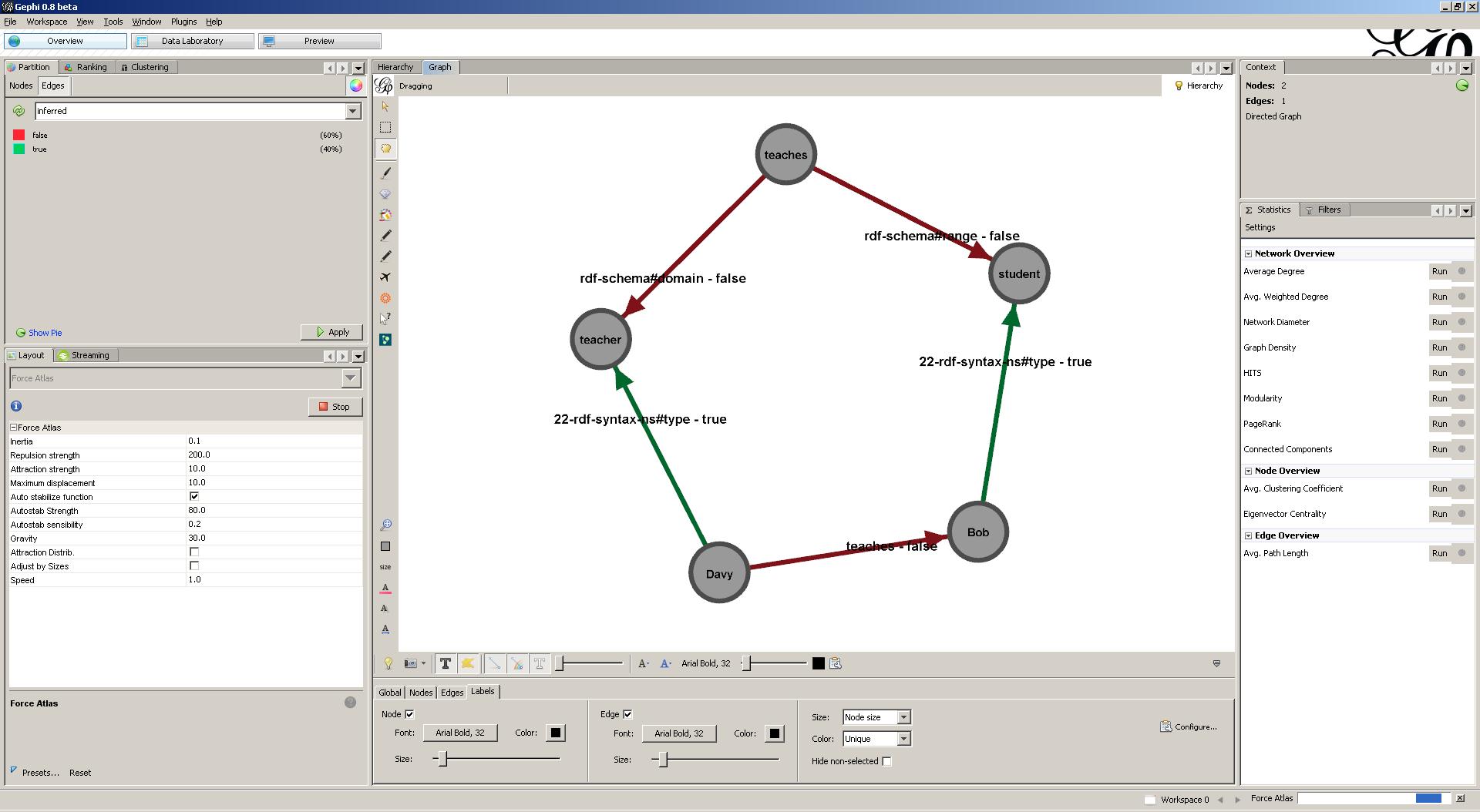

The first two RDF triples above state that a teacher teaches a student. The last RDF triple states that Davy teaches Bob. As a result, the RDF Schema inferencer deduces that Davy must be a teacher and that Bob must be a student. Let’s have a look at what Gephi visualized for us.

Mmm … That doesn’t really look impressive. Let’s use some formatting. First, apply the Force Atlas layout. Afterwards, scale the edges and enable the labels on both the edges and the nodes. Finally, partition the edges by coloring the arrows according to the inferred property on the edges. We can now clearly identify the inferred RDF statements (i.e. Davy being a teacher and Bob being a student).

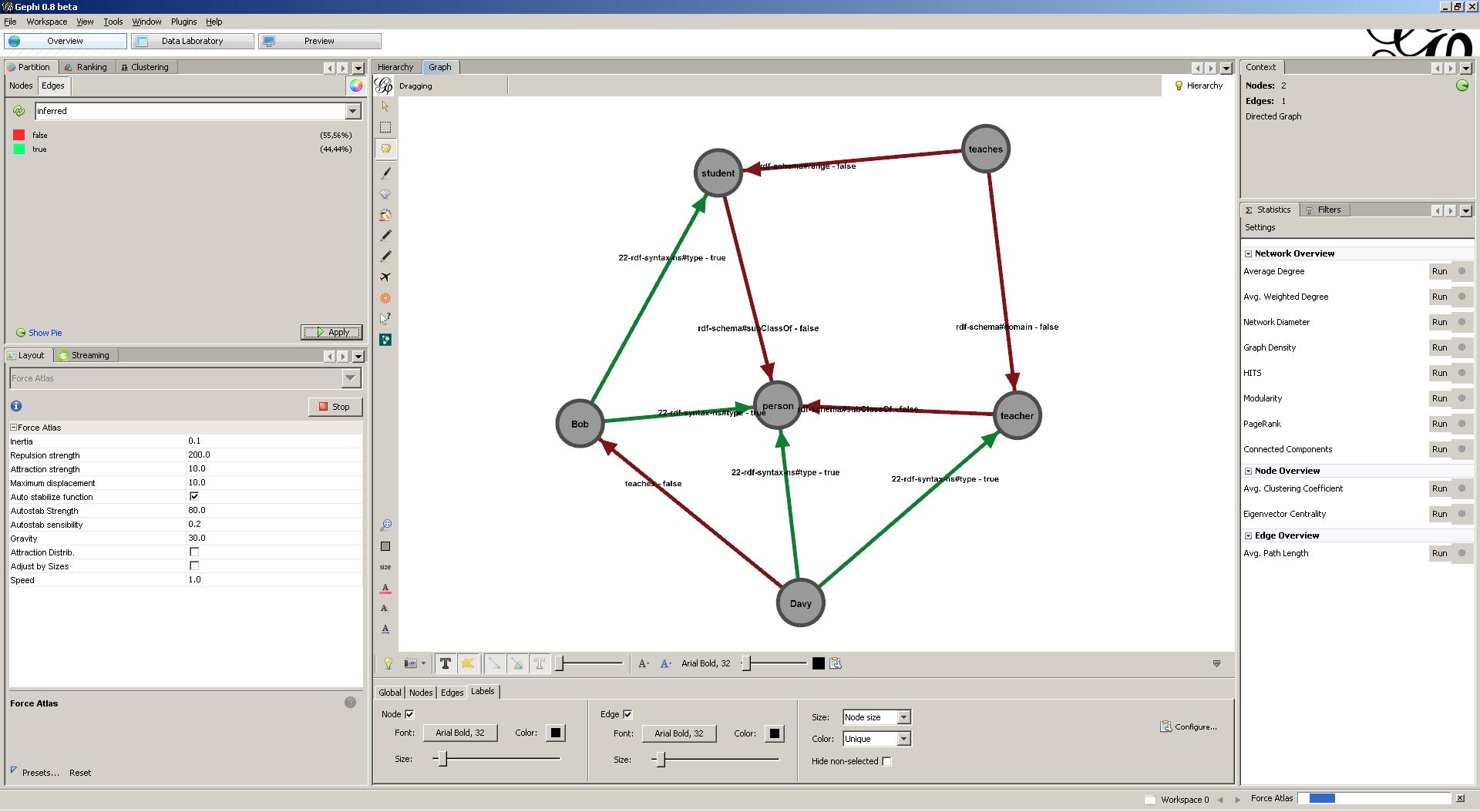

Let’s add some additional RDF triples.

Basically, these RDF triples state that both teacher and student are subclasses of person. As a result, the RDFS inferencer is able to deduce that both Davy and Bob must be persons. The Gephi visualization is updated accordingly.
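To make the reasoning behind these deductions concrete, here is a minimal forward-chaining sketch in plain Java. It is not how the Sail inferencer is implemented; it covers only three of the RDFS entailment rules (domain, range and subclass), and the `ex:` names are hypothetical identifiers standing in for the triples used in this article.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

final class RdfsSketch {
    static final String TYPE = "rdf:type";
    static final String DOMAIN = "rdfs:domain";
    static final String RANGE = "rdfs:range";
    static final String SUBCLASS = "rdfs:subClassOf";

    // A triple is represented as a three-element list: subject, predicate, object.
    static List<String> triple(String s, String p, String o) {
        return Arrays.asList(s, p, o);
    }

    // Applies the three rules until no new triple appears and returns
    // only the triples that were not part of the asserted input.
    static Set<List<String>> infer(Set<List<String>> asserted) {
        Set<List<String>> all = new LinkedHashSet<>(asserted);
        boolean changed = true;
        while (changed) {
            changed = false;
            List<List<String>> snapshot = new ArrayList<>(all);
            for (List<String> a : snapshot) {
                for (List<String> b : snapshot) {
                    // (p domain C) and (s p o) => (s type C)
                    if (a.get(1).equals(DOMAIN) && b.get(1).equals(a.get(0))) {
                        changed |= all.add(triple(b.get(0), TYPE, a.get(2)));
                    }
                    // (p range C) and (s p o) => (o type C)
                    if (a.get(1).equals(RANGE) && b.get(1).equals(a.get(0))) {
                        changed |= all.add(triple(b.get(2), TYPE, a.get(2)));
                    }
                    // (C subClassOf D) and (x type C) => (x type D)
                    if (a.get(1).equals(SUBCLASS) && b.get(1).equals(TYPE)
                            && b.get(2).equals(a.get(0))) {
                        changed |= all.add(triple(b.get(0), TYPE, a.get(2)));
                    }
                }
            }
        }
        all.removeAll(asserted);
        return all;
    }
}
```

Feeding it the five asserted triples (teaches has domain teacher and range student, Davy teaches Bob, teacher and student are subclasses of person) yields four inferred triples: Davy is a teacher and a person, Bob is a student and a person. A full RDFS reasoner derives more than this, which is one reason the pushed relationships need to be filtered down to our own domain.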

4. Conclusion

With just a few lines of code we are able to stream (inferred) RDF triples to Gephi and make use of its powerful visualization and analysis tools to explore and inspect our datasets. As always, the complete source code can be found on the Datablend public GitHub repository. Make sure to surf the internet to find some other nice Gephi streaming examples, the coolest one probably being the visualization of the Egyptian revolution on Twitter.